Training common classification networks with PyTorch

Published 2019-08-07 14:51

Building your own dataset

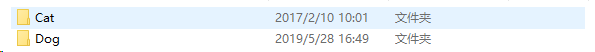

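This walkthrough uses the two-class cats-vs-dogs dataset from the Kaggle competition as an example.

First, generate txt files that list the image paths and labels of the dataset. The code is as follows: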

    import os

    # Raw string so the backslashes in the Windows path are not treated as escapes.
    path = r'E:\dataset\kaggle_cat_dog\PetImages'

    num = 0
    with open("train.txt", 'w') as filetrain, open("test.txt", 'w') as filetest:
        for class_pet in os.listdir(path):
            # Label 0 for the 'Cat' folder, 1 for any other folder ('Dog').
            label = 0 if class_pet == 'Cat' else 1
            for img in os.listdir(os.path.join(path, class_pet)):
                _, ext = os.path.splitext(img)
                # splitext keeps the leading dot, e.g. '.jpg'.
                if ext.lower() not in ('.jpg', '.png'):
                    continue
                absolute_path = os.path.join(path, class_pet, img)
                # Every 8th image goes to the test list, the rest to the training list.
                target = filetest if num % 8 == 0 else filetrain
                target.write(absolute_path + " " + str(label) + "\n")
                num += 1

Here `num % 8` sends every eighth image to the test set, so the dataset is split roughly 7:1 into a training set and a test set. The result is two txt files.
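As a quick check of what the `num % 8` rule does to the proportions, the sketch below applies the same rule to a list of made-up file names. The function name `split_every_nth` and the file names are hypothetical and only serve the illustration.

```python
def split_every_nth(items, n=8):
    """Send every n-th item (index 0, n, 2n, ...) to test, the rest to train."""
    train, test = [], []
    for index, item in enumerate(items):
        (test if index % n == 0 else train).append(item)
    return train, test


# 800 made-up file names stand in for the real image folder.
names = ['img_%d.jpg' % i for i in range(800)]
train, test = split_every_nth(names)
print(len(train), len(test))  # 700 100
```

One image in eight lands in the test list, which is a 7 to 1 ratio between training and test images.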

Next, load and read the dataset. The code is:

    import torch
    import torchvision.transforms as transforms
    from PIL import Image
    from torch.utils.data import DataLoader, Dataset

    class MyDataset(Dataset):
        def __init__(self, root, datatxt, transform=None):
            super(MyDataset, self).__init__()
            imgs = []
            # Each line holds "<image path> <label>".
            with open(root + datatxt, 'r') as fh:
                for line in fh:
                    words = line.rstrip().rsplit(maxsplit=1)
                    imgs.append((words[0], int(words[1])))
            self.imgs = imgs
            self.transform = transform

        def __getitem__(self, index):
            fn, label = self.imgs[index]
            img = Image.open(fn).convert('RGB')
            if self.transform is not None:
                img = self.transform(img)
            return img, label

        def __len__(self):
            return len(self.imgs)

    transform_train = transforms.Compose([
        # Resize first so that every image is at least as large as the crop.
        transforms.Resize((256, 256)),
        transforms.RandomCrop(224),
        transforms.RandomHorizontalFlip(),
        transforms.ToTensor(),
    ])

    transform_test = transforms.Compose([
        # Images must share one size before they can be stacked into a batch.
        transforms.Resize((224, 224)),
        transforms.ToTensor(),
    ])

    trainset = MyDataset(root='E:/tensorflow/SENet-Tensorflow-master/', datatxt='train.txt', transform=transform_train)
    trainloader = DataLoader(trainset, batch_size=8, shuffle=True)
    testset = MyDataset(root='E:/tensorflow/SENet-Tensorflow-master/', datatxt='test.txt', transform=transform_test)
    # Shuffling is not needed for evaluation.
    testloader = DataLoader(testset, batch_size=8, shuffle=False)

Next, add the network model, using VGG16 as an example:
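Before reading the definition, it may help to check the feature-map arithmetic of a VGG-style stack. The sketch below is plain Python rather than PyTorch and assumes 224x224 inputs, five blocks of 3x3 convolutions with padding 1, and a 2x2 pooling with stride 2 after each block. The helper names are hypothetical.

```python
def conv_out(size, kernel=3, padding=1, stride=1):
    """Spatial size after a convolution (standard floor formula)."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel=2, stride=2):
    """Spatial size after max pooling without padding."""
    return (size - kernel) // stride + 1


def flattened_features(input_size=224, blocks=5, convs_per_block=2, channels=512):
    """Return the final spatial size and the flattened feature count."""
    size = input_size
    for _ in range(blocks):
        for _ in range(convs_per_block):
            size = conv_out(size)  # 3x3 conv with padding 1 keeps the size
        size = pool_out(size)      # 2x2 pooling with stride 2 halves it
    return size, channels * size * size


size, features = flattened_features()
print(size, features)  # 7 25088
```

Under these assumptions the flattened tensor fed to the first fully connected layer has 512 * 7 * 7 = 25088 values per image.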

    import torch
    import torch.nn as tnn

    class VGG16(tnn.Module):
        # Images are 2D, so the 2d variants of conv, batch norm and pooling are used.
        # Note: the standard VGG16 has three conv layers in blocks 3 to 5;
        # this version keeps two conv layers per block.
        def __init__(self):
            super(VGG16, self).__init__()
            self.layer1 = tnn.Sequential(
                # 1-1 conv layer
                tnn.Conv2d(3, 64, kernel_size=3, padding=1),
                tnn.BatchNorm2d(64),
                tnn.ReLU(),
                # 1-2 conv layer
                tnn.Conv2d(64, 64, kernel_size=3, padding=1),
                tnn.BatchNorm2d(64),
                tnn.ReLU(),
                # 1 Pooling layer
                tnn.MaxPool2d(kernel_size=2, stride=2))
            self.layer2 = tnn.Sequential(
                # 2-1 conv layer
                tnn.Conv2d(64, 128, kernel_size=3, padding=1),
                tnn.BatchNorm2d(128),
                tnn.ReLU(),
                # 2-2 conv layer
                tnn.Conv2d(128, 128, kernel_size=3, padding=1),
                tnn.BatchNorm2d(128),
                tnn.ReLU(),
                # 2 Pooling layer
                tnn.MaxPool2d(kernel_size=2, stride=2))
            self.layer3 = tnn.Sequential(
                # 3-1 conv layer
                tnn.Conv2d(128, 256, kernel_size=3, padding=1),
                tnn.BatchNorm2d(256),
                tnn.ReLU(),
                # 3-2 conv layer
                tnn.Conv2d(256, 256, kernel_size=3, padding=1),
                tnn.BatchNorm2d(256),
                tnn.ReLU(),
                # 3 Pooling layer
                tnn.MaxPool2d(kernel_size=2, stride=2))
            self.layer4 = tnn.Sequential(
                # 4-1 conv layer
                tnn.Conv2d(256, 512, kernel_size=3, padding=1),
                tnn.BatchNorm2d(512),
                tnn.ReLU(),
                # 4-2 conv layer
                tnn.Conv2d(512, 512, kernel_size=3, padding=1),
                tnn.BatchNorm2d(512),
                tnn.ReLU(),
                # 4 Pooling layer
                tnn.MaxPool2d(kernel_size=2, stride=2))
            self.layer5 = tnn.Sequential(
                # 5-1 conv layer
                tnn.Conv2d(512, 512, kernel_size=3, padding=1),
                tnn.BatchNorm2d(512),
                tnn.ReLU(),
                # 5-2 conv layer
                tnn.Conv2d(512, 512, kernel_size=3, padding=1),
                tnn.BatchNorm2d(512),
                tnn.ReLU(),
                # 5 Pooling layer
                tnn.MaxPool2d(kernel_size=2, stride=2))
            self.layer6 = tnn.Sequential(
                # 6 Fully connected layer
                # Dropout layer omitted since batch normalization is used.
                # 512 * 7 * 7 assumes 224x224 inputs (five poolings: 224 -> 7).
                tnn.Linear(512 * 7 * 7, 4096),
                tnn.BatchNorm1d(4096),
                tnn.ReLU())
            self.layer7 = tnn.Sequential(
                # 7 Fully connected layer
                # Dropout layer omitted since batch normalization is used.
                tnn.Linear(4096, 4096),
                tnn.BatchNorm1d(4096),
                tnn.ReLU())
            self.layer8 = tnn.Sequential(
                # 8 output layer
                # No Softmax here: nn.CrossEntropyLoss expects raw class scores.
                tnn.Linear(4096, 2),
                tnn.BatchNorm1d(2))

        def forward(self, x):
            out = self.layer1(x)
            out = self.layer2(out)
            out = self.layer3(out)
            out = self.layer4(out)
            out = self.layer5(out)
            vgg16_features = out.view(out.size(0), -1)
            out = self.layer6(vgg16_features)
            out = self.layer7(out)
            out = self.layer8(out)
            # Returns the flattened features and the class scores.
            return vgg16_features, out

With the model defined, training can begin:

    import time
    import torch
    import torch.nn as nn

    class AverageMeter(object):
        """Computes and stores the average and current value"""
        def __init__(self):
            self.reset()

        def reset(self):
            self.val = 0
            self.avg = 0
            self.sum = 0
            self.count = 0

        def update(self, val, n=1):
            self.val = val
            self.sum += val * n
            self.count += n
            self.avg = self.sum / self.count

    def accuracy(output, target, topk=(1,)):
        """Computes the precision@k for the specified values of k"""
        maxk = max(topk)
        batch_size = target.size(0)
        _, pred = output.topk(maxk, 1, True, True)
        pred = pred.t()
        correct = pred.eq(target.view(1, -1).expand_as(pred))
        res = []
        for k in topk:
            # reshape (not view): the transposed slice may be non-contiguous.
            correct_k = correct[:k].reshape(-1).float().sum(0)
            res.append(correct_k.mul_(100.0 / batch_size))
        return res

    def train(train_loader, model, criterion, optimizer, epoch, print_freq=10):
        # print_freq stands in for the undefined args.print_freq; 10 is an assumed default.
        batch_time = AverageMeter()
        losses = AverageMeter()
        top1 = AverageMeter()
        # switch to train mode
        model.train()
        end = time.time()
        for i, (input, target) in enumerate(train_loader):
            # `async` is a reserved word in Python 3.7+; the argument is now non_blocking.
            target = target.cuda(non_blocking=True)
            input = input.cuda()
            # compute output; the model returns (features, class scores)
            _, output = model(input)
            loss = criterion(output, target)
            # measure accuracy and record loss
            prec1 = accuracy(output.detach(), target, topk=(1,))[0]
            losses.update(loss.item(), input.size(0))
            top1.update(prec1.item(), input.size(0))
            # compute gradient and do SGD step
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()
            # measure elapsed time
            batch_time.update(time.time() - end)
            end = time.time()
            if i % print_freq == 0:
                print('Epoch: [{0}][{1}/{2}]\t'
                      'Time {batch_time.val:.3f} ({batch_time.avg:.3f})\t'
                      'Loss {loss.val:.4f} ({loss.avg:.4f})\t'
                      'Prec@1 {top1.val:.3f} ({top1.avg:.3f})'.format(
                          epoch, i, len(train_loader), batch_time=batch_time,
                          loss=losses, top1=top1))
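To illustrate why `update` takes the batch size `n`, the sketch below restates `AverageMeter` in compact form and feeds it three made-up batch losses, the last from a smaller final batch. The loss values and batch sizes are invented for the illustration.

```python
class AverageMeter(object):
    """Keeps the latest value and a running average weighted by sample count."""

    def __init__(self):
        self.val = 0
        self.sum = 0
        self.count = 0
        self.avg = 0

    def update(self, val, n=1):
        self.val = val
        self.sum += val * n
        self.count += n
        self.avg = self.sum / self.count


meter = AverageMeter()
# (mean loss of the batch, number of samples in the batch); values are made up.
for batch_loss, batch_size in [(0.9, 8), (0.7, 8), (0.4, 4)]:
    meter.update(batch_loss, batch_size)

unweighted = (0.9 + 0.7 + 0.4) / 3
print(round(meter.avg, 4), round(unweighted, 4))  # 0.72 0.6667
```

The weighted average (0.72) is the mean loss per sample, whereas the plain mean of the batch values (about 0.667) gives the smaller final batch too much influence.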

The test function is:

    def validate(val_loader, model, criterion, epoch, print_freq=10):
        """Perform validation on the validation set"""
        # print_freq stands in for the undefined args.print_freq; 10 is an assumed default.
        batch_time = AverageMeter()
        losses = AverageMeter()
        top1 = AverageMeter()
        # switch to evaluate mode
        model.eval()
        end = time.time()
        # torch.no_grad() replaces the removed volatile=True flag.
        with torch.no_grad():
            for i, (input, target) in enumerate(val_loader):
                target = target.cuda(non_blocking=True)
                input = input.cuda()
                # compute output; the model returns (features, class scores)
                _, output = model(input)
                loss = criterion(output, target)
                # measure accuracy and record loss
                prec1 = accuracy(output, target, topk=(1,))[0]
                losses.update(loss.item(), input.size(0))
                top1.update(prec1.item(), input.size(0))
                # measure elapsed time
                batch_time.update(time.time() - end)
                end = time.time()
                if i % print_freq == 0:
                    print('Test: [{0}/{1}]\t'
                          'Time {batch_time.val:.3f} ({batch_time.avg:.3f})\t'
                          'Loss {loss.val:.4f} ({loss.avg:.4f})\t'
                          'Prec@1 {top1.val:.3f} ({top1.avg:.3f})'.format(
                              i, len(val_loader), batch_time=batch_time, loss=losses,
                              top1=top1))
        print(' * Prec@1 {top1.avg:.3f}'.format(top1=top1))
        # log to TensorBoard
        # if args.tensorboard:
        #     log_value('val_loss', losses.avg, epoch)
        #     log_value('val_acc', top1.avg, epoch)
        return top1.avg
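The Prec@1 figure printed by these functions is top-1 accuracy: the percentage of samples whose highest-scoring class equals the label. The sketch below shows the same idea in plain Python on four made-up score rows; it is an illustration of the metric, not the tensor-based `accuracy` function used in the post, and the name `topk_accuracy` is hypothetical.

```python
def topk_accuracy(scores, targets, k=1):
    """Percentage of samples whose true label is among the k highest scores."""
    hits = 0
    for row, target in zip(scores, targets):
        # Class indices sorted from highest to lowest score.
        ranked = sorted(range(len(row)), key=lambda c: row[c], reverse=True)
        if target in ranked[:k]:
            hits += 1
    return 100.0 * hits / len(targets)


# Made-up scores for a two-class problem (0 = cat, 1 = dog).
scores = [[2.0, 0.5], [0.1, 1.2], [0.3, 0.2], [0.4, 0.9]]
targets = [0, 1, 1, 1]
print(topk_accuracy(scores, targets, k=1))  # 75.0
print(topk_accuracy(scores, targets, k=2))  # 100.0
```

With only two classes, top-2 accuracy is always 100%, so top-1 is the only informative value for this dataset.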


Finally, add the main script:

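    def adjust_learning_rate(optimizer, epoch, base_lr=0.001):
        # Not defined in the original post. Assumed schedule: divide the
        # learning rate by 10 every 60 epochs.
        lr = base_lr * (0.1 ** (epoch // 60))
        for param_group in optimizer.param_groups:
            param_group['lr'] = lr

    model = VGG16()
    model = model.cuda()
    criterion = nn.CrossEntropyLoss().cuda()
    optimizer = torch.optim.SGD(model.parameters(), 0.001,
                                momentum=0.9,
                                nesterov=True,
                                weight_decay=1e-4)

    # Initialise once, before the loop, so the best value is kept across epochs.
    best_prec1 = 0
    for epoch in range(0, 200):
        adjust_learning_rate(optimizer, epoch)
        # train for one epoch
        train(trainloader, model, criterion, optimizer, epoch)
        # evaluate on validation set
        prec1 = validate(testloader, model, criterion, epoch)
        # remember the best accuracy seen so far
        is_best = prec1 > best_prec1
        best_prec1 = max(prec1, best_prec1)
    print('Best accuracy: ', best_prec1)

Adjust the file paths in the code to match your own directories.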
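When adapting the paths on Windows, backslashes deserve some care, because sequences such as `\t` are escape codes in ordinary Python strings. The sketch below uses hypothetical paths to show the problem and two common ways around it: raw strings and `pathlib`.

```python
from pathlib import PureWindowsPath

# In a normal string, "\t" is a tab character, so this path is silently corrupted.
broken = 'E:\tensorflow\train.txt'
# A raw string keeps every backslash exactly as written.
raw = r'E:\tensorflow\train.txt'
print('\t' in broken, '\t' in raw)  # True False

# PureWindowsPath joins components without hand-written separators.
root = PureWindowsPath('E:/dataset') / 'kaggle_cat_dog' / 'PetImages'
print(root)             # E:\dataset\kaggle_cat_dog\PetImages
print(root.as_posix())  # E:/dataset/kaggle_cat_dog/PetImages
```

Forward slashes also work in Windows paths passed to `open` and `os.listdir`, which avoids the escaping issue altogether.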


Author: dkjf787

Link: https://www.pythonheidong.com/blog/article/11397/9ff13f1bc7228fcecf5e/

Source: python黑洞网

Please credit the source for any form of reproduction. Infringement, once discovered, will be pursued under the law.
