PyTorch Study Notes, Lecture 6: Implementing a Trimmed-Down ResNet50

Published 2019-08-22 16:43

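Let's start with a version based on the implementation that ships with PyTorch.

That version is concise and elegant, but it is harder to tweak if you want to change individual parameters yourself. So in this article I take its most basic unit, the bottleneck block (`BottleBlock`), and build my own version around it. This version is easier to modify and debug.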

```python
import math

import torch
import torch.nn as nn
import torch.nn.functional as F


def conv3x3(in_plane, out_plane, stride=1):
    """3x3 convolution with padding=1, so only the stride changes the spatial size."""
    return nn.Conv2d(in_plane, out_plane, kernel_size=3, stride=stride, padding=1, bias=True)


class BasicBlock(nn.Module):
    expansion = 1

    def __init__(self, in_planes, planes, stride=1, downsample=None):
        super(BasicBlock, self).__init__()
        self.conv1 = conv3x3(in_planes, planes, stride)
        self.bn1 = nn.BatchNorm2d(planes)
        self.relu = nn.ReLU(inplace=True)
        self.conv2 = conv3x3(planes, planes)
        # Feature maps are 4-D (N, C, H, W), so this must be BatchNorm2d.
        self.bn2 = nn.BatchNorm2d(planes)
        self.downsample = downsample
        self.stride = stride

    def forward(self, x):
        residual = x

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)

        # Project the shortcut when the shape of x differs from the shape of out.
        if self.downsample is not None:
            residual = self.downsample(x)

        out += residual
        out = self.relu(out)
        return out


class BottleBlock(nn.Module):
    expansion = 4

    # stride defaults to 1 so that _make_layer can call block(inplanes, planes)
    # for the second and later blocks of a stage.
    def __init__(self, in_planes, planes, stride=1, downsample=None):
        super(BottleBlock, self).__init__()
        self.conv1 = nn.Conv2d(in_planes, planes, kernel_size=1, bias=True)
        self.bn1 = nn.BatchNorm2d(planes)
        self.conv2 = conv3x3(planes, planes, stride)
        self.bn2 = nn.BatchNorm2d(planes)
        self.conv3 = nn.Conv2d(planes, planes * 4, kernel_size=1, bias=True)
        self.bn3 = nn.BatchNorm2d(planes * 4)
        self.relu = nn.ReLU(inplace=True)
        self.downsample = downsample
        self.stride = stride

    def forward(self, x):
        residual = x

        # 1x1 conv: reduce channels
        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        # 3x3 conv: the only layer that carries the stride
        out = self.conv2(out)
        out = self.bn2(out)
        out = self.relu(out)

        # 1x1 conv: expand channels to planes * 4
        out = self.conv3(out)
        out = self.bn3(out)

        if self.downsample is not None:
            residual = self.downsample(x)

        out += residual
        out = self.relu(out)
        return out


class VVResNet(nn.Module):

    def __init__(self, block, layers, num_classes=1000):
        super(VVResNet, self).__init__()
        self.inplanes = 64

        # Special attributes
        self.input_space = None
        self.input_size = (299, 299, 3)
        self.mean = None
        self.std = None

        # Modules
        self.conv1 = nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3, bias=True)
        self.bn1 = nn.BatchNorm2d(64)
        self.relu = nn.ReLU(inplace=True)
        self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
        self.layer1 = self._make_layer(block, 64, layers[0])
        self.layer2 = self._make_layer(block, 128, layers[1], stride=2)
        self.layer3 = self._make_layer(block, 256, layers[2], stride=2)
        self.layer4 = self._make_layer(block, 512, layers[3], stride=2)
        self.last_layer = nn.Linear(512 * block.expansion, num_classes)

        # Initialize params
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                n = m.kernel_size[0] * m.kernel_size[1] * m.out_channels
                m.weight.data.normal_(0, math.sqrt(2. / n))
            elif isinstance(m, nn.BatchNorm2d):
                m.weight.data.fill_(1)
                m.bias.data.zero_()

    def _make_layer(self, block, planes, blocks, stride=1):
        downsample = None
        # A projection shortcut is needed whenever the block changes the
        # spatial size or the channel count.
        if stride != 1 or self.inplanes != planes * block.expansion:
            downsample = nn.Sequential(
                nn.Conv2d(self.inplanes, planes * block.expansion,
                          kernel_size=1, stride=stride, bias=True),
                nn.BatchNorm2d(planes * block.expansion),
            )

        layers = []
        layers.append(block(self.inplanes, planes, stride, downsample))
        self.inplanes = planes * block.expansion
        for i in range(1, blocks):
            layers.append(block(self.inplanes, planes))

        return nn.Sequential(*layers)

    def features(self, input):
        x = self.conv1(input)
        self.conv1_input = x.clone()
        x = self.bn1(x)
        x = self.relu(x)
        x = self.maxpool(x)

        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)
        return x

    def logits(self, features):
        # Pool over the full feature map, whatever its size.
        adaptiveAvgPoolWidth = features.shape[2]
        x = F.avg_pool2d(features, kernel_size=adaptiveAvgPoolWidth)
        x = x.view(x.size(0), -1)
        x = self.last_layer(x)
        return x

    def forward(self, input):
        x = self.features(input)
        x = self.logits(x)
        return x


def VVresnet50(num_classes=1000):
    # model = VVResNet(BottleBlock, [3, 4, 6, 3], num_classes=num_classes)
    model = VVResNet(BottleBlock, [1, 1, 1, 1], num_classes)
    return model
```

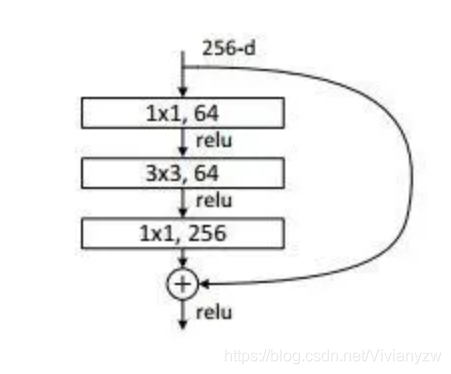
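
The initialization loop in the listing above draws each convolution weight from a zero-mean normal distribution with standard deviation sqrt(2 / n), where n = kernel_h * kernel_w * out_channels. The following is a small sketch of that arithmetic in plain Python, so it runs without PyTorch. The helper name `he_std` is my own and does not appear in the original code.

```python
import math


def he_std(kernel_h, kernel_w, out_channels):
    """Standard deviation used by the init loop: sqrt(2 / n)."""
    n = kernel_h * kernel_w * out_channels
    return math.sqrt(2.0 / n)


# The 7x7 stem convolution with 64 output channels.
stem_std = he_std(7, 7, 64)
# A 1x1 convolution with 64 output channels (conv1 of the first BottleBlock).
bottleneck_1x1_std = he_std(1, 1, 64)

print(f"7x7 stem conv:        std = {stem_std:.4f}")
print(f"1x1 conv, 64 outputs: std = {bottleneck_1x1_std:.4f}")
```

Larger kernels and wider layers get a smaller standard deviation, which keeps the scale of the activations roughly comparable from layer to layer.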

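My own version has only one basic unit, the `BottleBlock`. Everything else follows the usual ResNet operations and is stacked sequentially into a network. The `BottleBlock` unit looks like this: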

```python
def conv3x3(inplanes, outplanes, stride=1):
    return nn.Conv2d(inplanes, outplanes, kernel_size=3, stride=stride, padding=1, bias=True)


class BottleBlock(nn.Module):

    def __init__(self, inplanes, planes, stride):
        super(BottleBlock, self).__init__()
        self.conv1 = nn.Conv2d(inplanes, planes, kernel_size=1, bias=True)
        self.bn1 = nn.BatchNorm2d(planes)
        self.conv2 = conv3x3(planes, planes, stride)
        self.bn2 = nn.BatchNorm2d(planes)
        self.conv3 = nn.Conv2d(planes, planes * 4, kernel_size=1, bias=True)
        self.bn3 = nn.BatchNorm2d(planes * 4)
        self.relu = nn.ReLU(inplace=True)
        # The shortcut is always a 1x1 projection here, so the block owns
        # its downsample branch instead of receiving it as an argument.
        self.downsample = nn.Sequential(
            nn.Conv2d(inplanes, planes * 4, kernel_size=1, stride=stride, bias=True),
            nn.BatchNorm2d(planes * 4)
        )

    def forward(self, x):
        # 1x1 reduce -> 3x3 (strided) -> 1x1 expand
        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)
        out = self.relu(out)

        out = self.conv3(out)
        out = self.bn3(out)

        # Project x to the same shape as out before adding.
        residual = self.downsample(x)
        out += residual
        out = self.relu(out)
        return out
```

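This code implements the bottleneck structure from the ResNet paper.

With this basic building block in place, the rest of the network is easy to implement.

Note that the original ResNet50 uses [3, 4, 6, 3] blocks in its four stages; I have simplified that to [1, 1, 1, 1] here. If you want more blocks, just add more `self.block` attributes on top of this. You can choose the parameters freely, as long as the channel counts and related settings line up. Written this way, the network is more convenient to adjust.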
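
To make "the channel counts line up" concrete, here is a minimal sketch in plain Python that lists the `(inplanes, planes, stride)` arguments each block would need for a given per-stage block count. The helper `bottleneck_plan` and its parameters are hypothetical names of my own. It assumes the usual ResNet convention that only the first block of stages 2 to 4 uses stride 2.

```python
def bottleneck_plan(layers, expansion=4, base_planes=64):
    """Return one (inplanes, planes, stride) tuple per bottleneck block."""
    plan = []
    inplanes = base_planes  # channels coming out of the stem (conv1 + maxpool)
    for stage, num_blocks in enumerate(layers):
        planes = base_planes * 2 ** stage  # 64, 128, 256, 512
        for block_idx in range(num_blocks):
            # Assumption: only the first block of stages 2-4 downsamples.
            stride = 2 if (stage > 0 and block_idx == 0) else 1
            plan.append((inplanes, planes, stride))
            # Every block outputs planes * expansion channels.
            inplanes = planes * expansion
    return plan


simplified = bottleneck_plan([1, 1, 1, 1])
full = bottleneck_plan([3, 4, 6, 3])

for args in simplified:
    print("BottleBlock(%d, %d, %d)" % args)
print("blocks in the full configuration:", len(full))
```

With `[1, 1, 1, 1]` the plan reproduces the four constructor calls used in this article. One thing to keep in mind when adding blocks: the `BottleBlock` defined here always applies its 1x1 downsample branch, so repeated blocks in a stage would use a projection shortcut rather than an identity shortcut.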

```python
class VVResnet50(nn.Module):

    def __init__(self):
        super(VVResnet50, self).__init__()
        # Stem: 7x7 conv + max pool
        self.conv1 = nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3, bias=True)
        self.bn1 = nn.BatchNorm2d(64)
        self.relu = nn.ReLU(inplace=True)
        self.maxpool = nn.MaxPool2d(3, 2, 1)

        # One bottleneck block per stage: BottleBlock(inplanes, planes, stride).
        # Each block outputs planes * 4 channels, which is the next block's inplanes.
        self.block1 = BottleBlock(64, 64, 1)
        self.block2 = BottleBlock(256, 128, 2)
        self.block3 = BottleBlock(512, 256, 2)
        self.block4 = BottleBlock(1024, 512, 2)

        self.last_layer = nn.Linear(2048, 1000)

    def forward(self, x):
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu(x)
        x = self.maxpool(x)

        x = self.block1(x)
        x = self.block2(x)
        x = self.block3(x)
        x = self.block4(x)

        # Fixed 7x7 average pool: assumes a 224x224 input.
        x = F.avg_pool2d(x, 7)
        x = x.view(x.size(0), -1)
        x = self.last_layer(x)
        return x
```

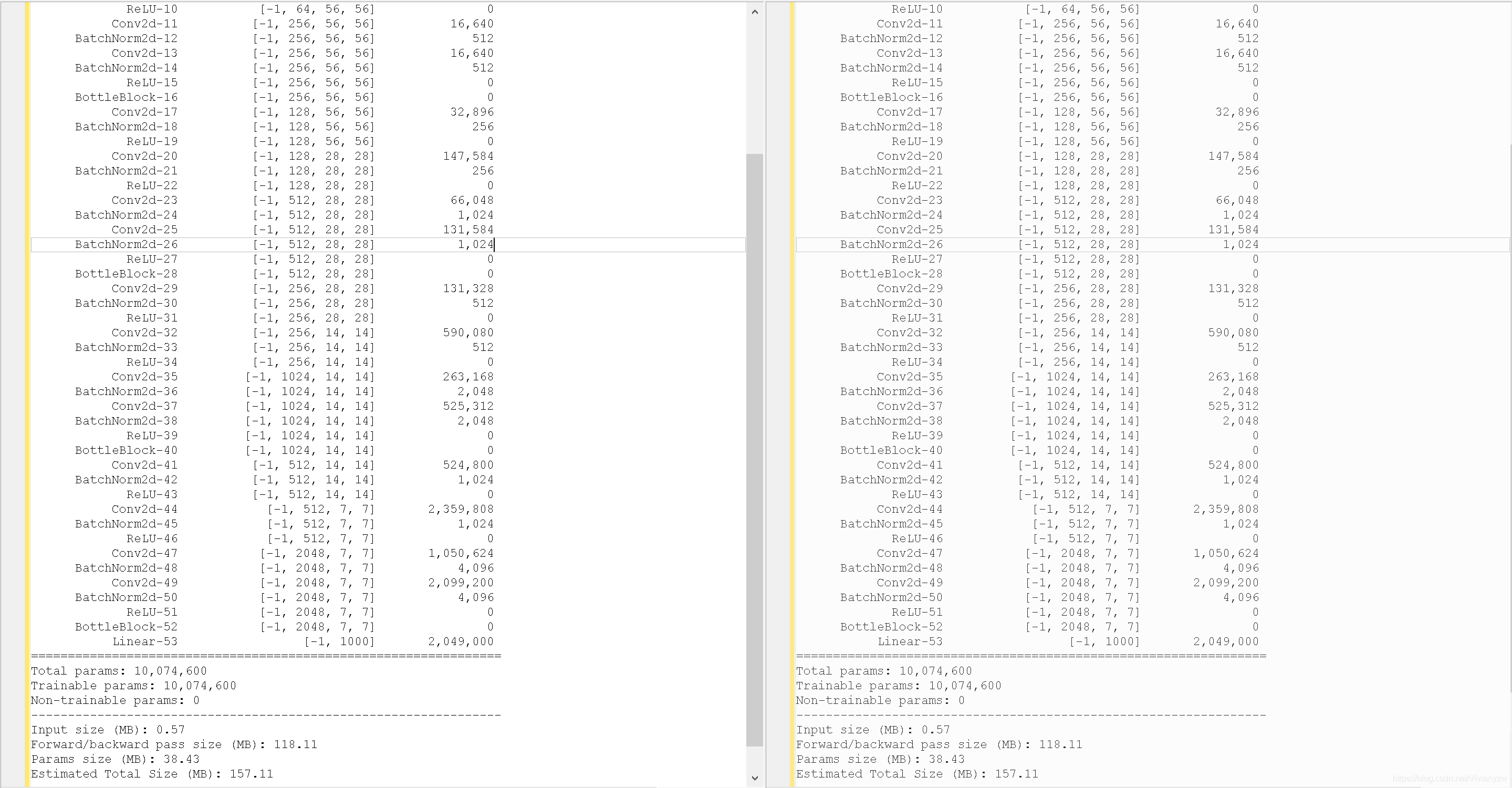
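
The fixed `F.avg_pool2d(x, 7)` and `nn.Linear(2048, 1000)` in the network above only fit together for a particular input size. The sketch below traces the spatial size through the network using the standard output-size formula `floor((n + 2p - k) / s) + 1`. It is plain Python, and the helper names `conv_out` and `trace_sizes` are my own.

```python
def conv_out(size, kernel, stride, padding):
    """Output length of a conv/pool layer along one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_sizes(input_size=224):
    """Spatial size after each stage of the simplified VVResnet50."""
    sizes = {}
    s = conv_out(input_size, kernel=7, stride=2, padding=3)
    sizes["conv1"] = s
    s = conv_out(s, kernel=3, stride=2, padding=1)
    sizes["maxpool"] = s
    # Inside a BottleBlock only the 3x3 conv (padding=1) carries the stride;
    # the 1x1 convs on the main path keep the size unchanged.
    for name, stride in [("block1", 1), ("block2", 2), ("block3", 2), ("block4", 2)]:
        s = conv_out(s, kernel=3, stride=stride, padding=1)
        sizes[name] = s
    return sizes


sizes_224 = trace_sizes(224)
sizes_448 = trace_sizes(448)
for name, size in sizes_224.items():
    print(f"{name}: {size}x{size}")
```

For a 224x224 input the trace ends at 7x7, so the 7x7 average pool leaves one value per channel and the final linear layer receives 2048 features. For a 448x448 input the trace ends at 14x14, the same pool would produce a 2x2 map, and the flattened vector would no longer match `nn.Linear(2048, 1000)`. The first listing sidesteps this by using `features.shape[2]` as the pooling kernel size.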

You can inspect the network's layers and parameter counts with the following code:

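```python
if __name__ == "__main__":
    # torchsummary is a third-party package (pip install torchsummary).
    from torchsummary import summary

    model = VVResnet50()
    # Sample batch for a manual forward pass, e.g. model(dummy_input).
    dummy_input = torch.randn(size=(1, 3, 224, 224), dtype=torch.float32)
    # print(model)
    summary(model, (3, 224, 224), device='cpu')
```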

I also printed the networks produced by the two implementations, and their structures are identical.
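
As a cross-check that does not depend on torchsummary, the number of trainable parameters can be worked out by hand from the layer definitions. This is a sketch in plain Python. The helper names are my own, and it assumes the simplified `[1, 1, 1, 1]` network with `bias=True` on every convolution, as in the code in this article.

```python
def conv_params(cin, cout, k):
    """Weights plus bias of a k x k convolution (bias=True in this article)."""
    return k * k * cin * cout + cout


def bn_params(channels):
    """Learnable scale and shift; the running statistics are buffers, not parameters."""
    return 2 * channels


def bottleblock_params(inplanes, planes):
    out = planes * 4
    main_path = (conv_params(inplanes, planes, 1) + bn_params(planes)
                 + conv_params(planes, planes, 3) + bn_params(planes)
                 + conv_params(planes, out, 1) + bn_params(out))
    shortcut = conv_params(inplanes, out, 1) + bn_params(out)
    return main_path + shortcut


stem = conv_params(3, 64, 7) + bn_params(64)
blocks = [bottleblock_params(i, p)
          for i, p in [(64, 64), (256, 128), (512, 256), (1024, 512)]]
head = 2048 * 1000 + 1000  # nn.Linear(2048, 1000): weights + bias
total = stem + sum(blocks) + head

print("stem:  ", stem)
print("blocks:", blocks)
print("head:  ", head)
print("total: ", total)
```

If both models are built as shown in this article, the total printed here should agree with the trainable parameter count that `summary` reports for each of them.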


Author: 皇后娘娘别惹我

Link: https://www.pythonheidong.com/blog/article/52993/1c18e49dd3eefbdeb8f7/

Source: python黑洞网

Please credit the source when reposting in any form. Infringement will be pursued under the law.
